7 dangerous mistakes in C#/.NET that are easy to make

C# is a great language, and the .NET Framework is pretty damn good too. C#’s strong typing reduces the amount of bugs that you’ll create when compared to other languages. Plus its general common sense design helps a lot, when compared to something like JavaScript (where true is false). Nevertheless, in every language there are traps that easy to fall to and misconceptions on the expected behavior of the language and framework, and I’ll detail some of these below

1. Not understanding deferred execution

I imagine seasoned developers are well aware of this .NET feature, but it can really bite developers who are unaware. In short, methods/statements that return IEnumerable<T> and yield each result are not executed on the line of code that actually calls it – they are executed when the resulting collection is accessed in some way*. Note that most LINQ statements will end up ‘yielding’ its results.

As an example, see the below unapologetically contrived unit test

[TestMethod]

[ExpectedException(typeof(ArgumentNullException))]

public void Ensure_Null_Exception_Is_Thrown()

{

var result = RepeatString5Times(null);

}

[TestMethod]

[ExpectedException(typeof(InvalidOperationException))]

public void Ensure_Invalid_Operation_Exception_Is_Thrown()

{

var result = RepeatString5Times("test");

var firstItem = result.First();

}

private IEnumerable<string> RepeatString5Times(string toRepeat)

{

if (toRepeat == null)

throw new ArgumentNullException(nameof(toRepeat));

for (int i = 0; i < 5; i++)

{

if (i == 3)

throw new InvalidOperationException("3 is a horrible number");

yield return $"{toRepeat} - {i}";

}

}

Both of these tests will fail. The first test will fail because the result is never accessed, so the body of the method is never called. The second test will fail for a different, slightly more complicated reason. We now retrieve the first result of our method call, to ensure that the method is actually being accessed. However, the deferred execution mechanism will exit from the method when it needs to – so in this case, we only want the first one, so once we yield the the first iteration the method halts its execution (so i == 3 is never true)

Deferred execution is actually a great feature, especially because it allows you to chain LINQ queries easily, only retrieving the data once your query is ready to be accessed.

2. Assuming the Dictionary type keeps items in the same order as you add them

This is a particularly nasty one, and I’m pretty sure I have some code out there that makes this assumption. When you add items to a List<T>, the items are kept in the same order as you add them – great. Sometimes you need to have another object associated with an item in a list, and the obvious answer is to use a Dictionary<TKey,TValue>, which allows you to specify an associated value to a key.

You can then iterate through the dictionary using foreach, and most of the time it will behave as would expect – you’ll be accessing the items in the same order as they are added to the dictionary. However, this behavior is undefined – i.e. is a happy coincidence rather than something you should assume and expect. This is explained in Microsoft’s documentation but I imagine not a lot of people will be looking at this page.

To illustrate, in the below example the output is

third

second

var dict = new Dictionary<string, object>();

dict.Add("first", new object());

dict.Add("second", new object());

dict.Remove("first");

dict.Add("third", new object());

foreach (var entry in dict)

{

Console.WriteLine(entry.Key);

}

Don’t believe me? Try it online here

3. Not considering thread safety

Multithreading is great (citation needed), there can be some huge performance improvements to your application if implemented correctly. However, once you introduce multithreading you have to be very, very careful with any objects you’ll be modifying because you’ll start to encounter seemingly random bugs unless you’re careful.

Simply put, a lot of common classes in the .NET library are not thread safe – this means that Microsoft provide no guarantees that you’re able to use the given class in parallel using multiple threads. This wouldn’t be much of a problem if you were able to discover any problems immediately, but the nature of multithreading means that any problems that arise are very volatile and unpredictable – it’s likely no two executions will yield the same result.

As an example, take this block of code that uses the humble, but not thread safe, List<T>

var items = new List<int>();

var tasks = new List<Task>();

for (int i = 0; i < 5; i++)

{

tasks.Add(Task.Run(() => {

for (int k = 0; k < 10000; k++)

{

items.Add(i);

}

}));

}

Task.WaitAll(tasks.ToArray());

Console.WriteLine(items.Count);

So we’re adding numbers from 0-4 to a list 10000 times each, which means the list should contain 50000 items. Does it? Well, there’s a small chance it will – but below are my results after running 5 times

28191

23536

44346

40007

40476

You can try it online yourself here

Essentially, this is because the Add method isn’t atomic, which essentially means that a thread can ‘interrupt’ the method can end up resizing the array while another thread is in the process of adding, or adding at the same index as another thread. A couple of times I was presented with an IndexOutOfRange exception, likely because the array was being resized while I was adding to it. So what we do we here? We could use the ‘lock’ keyword to ensure only one thread can Add to the list at a time, but this can significantly impact performance. Microsoft, being the helpful folk they are, provide some awesome collections that are thread safe, and highly optimised for performance. I’ve published an article describing how you can use these here

4. Overuse of lazy loading in LINQ

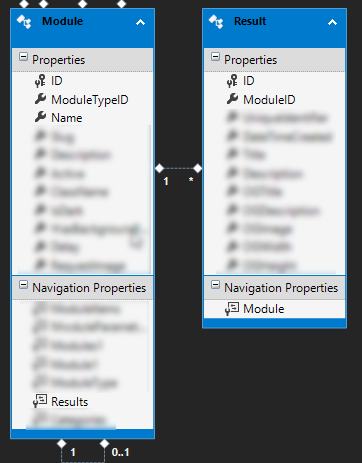

Lazy loading is a great feature of both LINQ to SQL and LINQ to Entities (Entity Framework) that allows related table rows to be loaded on demand. In one of my other projects, I have a table called Modules and a table called Results, with a one to many relationship (a module can have many results)

When I want to retrieve a certain module, I would certainly not want Entity Framework to bring back every ‘Result’ that the Module has! So, it’s clever enough that it would only execute the query to retrieve the results when I need to. So the below code would execute 2 queries – one to get the module, and another to get the results (for each module)

using (var db = new DBEntities())

{

var modules = db.Modules;

foreach (var module in modules)

{

var moduleType = module.Results;

//Do something with module here

}

}

However, what if I have hundreds of modules? That means a separate SQL query to get the Results records will execute for each Module! Clearly this is going to put a strain on the server and is going to considerably slow down your application. In Entity Framework, the answer is very simple – you can tell it to include a certain result set in your query. See below modified code, where there will only be one SQL query executed, which will include every module, and every result for that module (flattened to one query, which Entity Framework intelligently maps to your model)

using (var db = new DBEntities())

{

var modules = db.Modules.Include(b => b.Results);

foreach (var module in modules)

{

var moduleType = module.Results;

//Do something with module here

}

}

5. Not understanding how LINQ to SQL/Entity Frameworks translates queries

While I’m on the subject of LINQ, I feel it’s worth mentioning how different your code will execute if it’s inside a LINQ query. As a top level explanation, all of your code inside a LINQ query is translated to SQL using expressions – seems obvious, but it’s very, very easy to forgot the context your in and end up introducing some issues to your codebase. I’ve made the below list to describe some of the common roadblocks you may come into.

Most method calls will not work

So imagine you have the below query, to split the name of all Modules by the colon character and grab the second portion.

var modules = from m in db.Modules

select m.Name.Split(':')[1];

This will throw an exception in most LINQ providers – there is no SQL translation for the ‘Split’ method, Certain methods may be supported, such as adding days to a date, but it all depends on your provider.

The ones that do work may produce unexpected results…

Take the below LINQ statement (I have no idea why you would do this in practice, but please just pretend it’s a sensible query)

int modules = db.Modules.Sum(a => a.ID);

If you have any Module rows in the table, that will give you the sum of the IDs. Sounds about right! But what if you execute it using LINQ to Objects instead? We can do this by converting the Modules collection to a list before we execute our Sum method

int modules = db.Modules.ToList().Sum(a => a.ID);

Shock, horror – this does the exact same thing! However, what if you had no rows in the Modules table? The LINQ to Objects would return 0, and the Entity Framework/LINQ to SQL version will throw an InvalidOperationException saying that it cannot convert ‘int?’ to ‘int’…not good. This is because when you perform a SUM in SQL on an empty set, you get NULL returned instead of 0 – hence it tries to return a nullable int instead. There’s some guidance on how fix it here, if you encounter this problem.

Knowing when to just use plain old SQL

If you’re performing an extraordinarily complicated query, then your translated query may end up looking like something that has been vomited, swallowed again and re-vomitted. Unfortunately I don’t have any examples to hand, but based on my anecdotal evidence, it seems to really like using nested derived tables, which makes maintenance a nightmare.

Also, if you come across any performance bottlenecks, you’re going to have a hard time improving them as you do not have direct control over the generated SQL. Either do it in SQL, or delegate it to a DBA if you have one if your company!

6. Rounding incorrectly

Now for something a bit simpler than the previous points, but one that I used to always forget about and end up with some nasty bugs (and if it involves financial values, prefer for an angry financial director/CEO).

The .NET framework includes a lovely static method in the Math class called Round, which takes a numeric value and rounds to a given decimal places. Works perfectly most of the time, but what about when you are trying to round 2.25 to 1dp? I imagine you probably expect it to round to 2.3 – that’s why we all taught, right? Well, it turns out that .NET uses bankers rounding, which would round the given example to 2.2! This is because bankers rounding rounds to the nearest even number if the number is at the ‘midpoint’. Luckily, this can be overridden in the Math.Round method with ease

Math.Round(2.25,1, MidpointRounding.AwayFromZero)

7. The horrible ‘DBNull’ class

This may bring back painful memories for some – ORM’s hide this nastiness from us, but if you delve into the world of raw ADO.NET (SqlDataReader’s and the like) you’ll come across the DBNull.Value value.

I’m not 100% sure of the reason why NULL values from the database are handled like this (please comment below if you know!), but Microsoft chose to represent them with a special type, DBNull (with the static Value field). I can think of one advantage of this – you won’t get any nasty NullReferenceException exceptions when accessing a database field that is NULL. However, not only do you have to maintain a secondary way of checking for NULL values (which can easily be forgotten which would lead to major bugs), but you lose any of the lovely C# language features that help dealing with nulls. What could be as easy as

reader.GetString(0) ?? "NULL";

becomes…

reader.GetString(0) != DBNull.Value ? reader.GetString(0) : "NULL";

Yuck.

Footnotes

These are just some of the unusual ‘gotchas’ I’ve come across in .NET – if you know any more, I’d love to hear from you below

> I’m not 100% sure of the reason why NULL values from the database are handled like this (please comment below if you know!), but Microsoft chose to represent them with a special type,

That’s the way it was done in Visual Basic in the pre-.NET era. VB 6 doesn’t have unified object model (everything inheriting from System.Object) or the concept of a “null”. There is `Nothing`, but that’s more like C#’s `default` than a true null.

So in VB 6 and ADO (probably DAO as well, I can’t remember), you have a value called DBNull to represent a database null,

The difference may seem academic, but DB nulls and null nulls have different semantics. Specifically I’m talking about strings. In VB, a null string is equal to an empty string. There’s no way to separate the two concepts in VB 6 and in VB 7+ (i.e. VB.NET) the different is still somewhat hidden.

Now I can’t say exactly why DBNull was carried into ADO.NET. Maybe backwards compatibility, maybe conceptual purity, maybe to deal with VB’s string handling. But I hope this story at least was interesting.

As for DBNull, a tip is to use DataAdapter rather than icky low level DataReaders in case your result set is not huge. Then, after adapter.Fill(table), use the DataTable.Field() convenience method for strong typing and DBNull-awareness. Even supports nullable types!

https://docs.microsoft.com/en-us/dotnet/api/system.data.datarowextensions.field?view=netframework-4.8

Back in the old c# days there wasn’t a thing such as nullable ints, floats, dates and so on, so there was no way you could represent a db null value using those data types.

Great read! Thank you for some great work!

Great read!! Thank you so much!